Difference between revisions of "project01:W12022G3P4"


== Computer Vision ==

In the Computer Vision session, we explored using scripts to handle image-related tasks. By calling different libraries, a script can display an image, reshape it, and apply filters to it. We also gained a better understanding of how a computer reads an image, which is very different from how humans perceive it.
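To illustrate how a computer reads an image, here is a minimal sketch in plain Python rather than the libraries used in the session. It treats a grayscale image as a grid of brightness values, then reshapes and filters it. The sample `image` and the helper names `shape`, `resize_nearest`, and `box_blur` are assumptions made for illustration, not code from the session.

```python
# To the computer, a grayscale image is a grid of numbers:
# 0 is black, 255 is white. This 4x4 sample is made up for illustration.
image = [
    [0,   0,   0, 0],
    [0, 255, 255, 0],
    [0, 255, 255, 0],
    [0,   0,   0, 0],
]


def shape(img):
    """Return (rows, columns) of the pixel grid."""
    return len(img), len(img[0])


def resize_nearest(img, new_h, new_w):
    """Reshape the image by copying the nearest source pixel."""
    h, w = shape(img)
    return [
        [img[r * h // new_h][c * w // new_w] for c in range(new_w)]
        for r in range(new_h)
    ]


def box_blur(img):
    """Apply a 3x3 averaging filter; edge pixels use the neighbours that exist."""
    h, w = shape(img)
    out = []
    for r in range(h):
        row = []
        for c in range(w):
            neighbours = [
                img[rr][cc]
                for rr in range(max(0, r - 1), min(h, r + 2))
                for cc in range(max(0, c - 1), min(w, c + 2))
            ]
            row.append(sum(neighbours) // len(neighbours))
        out.append(row)
    return out


if __name__ == "__main__":
    print("shape:", shape(image))
    for row in box_blur(image):
        print(row)
```

Libraries such as OpenCV wrap the same idea in optimized array operations, so the filter above is only meant to show what happens to the numbers, not how a production script would do it.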


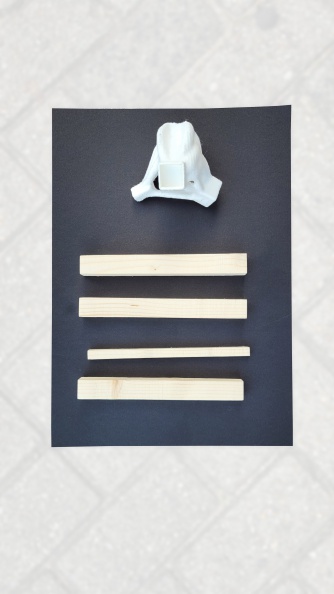

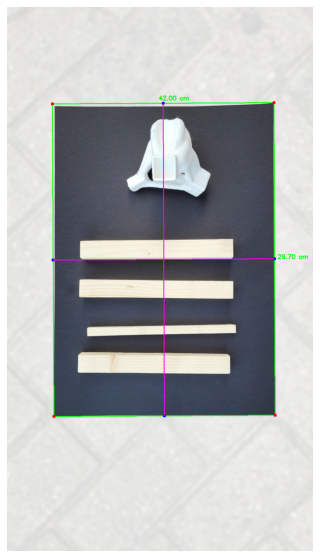

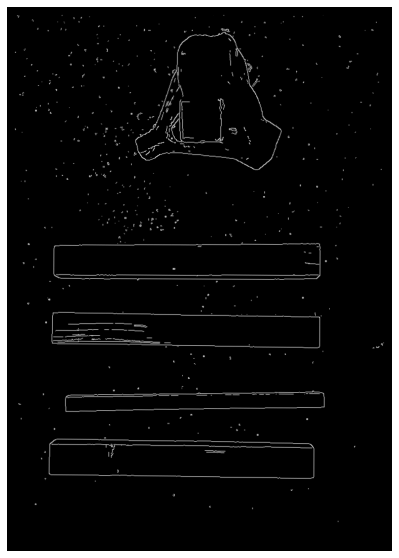

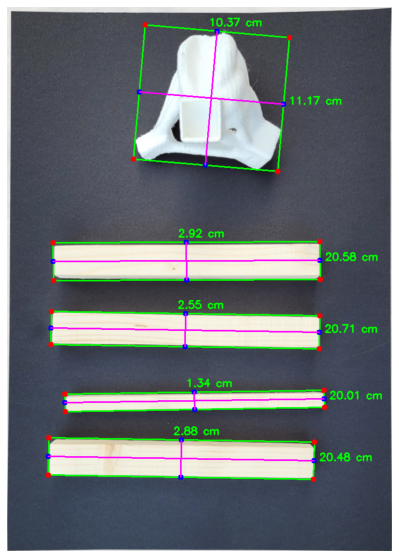

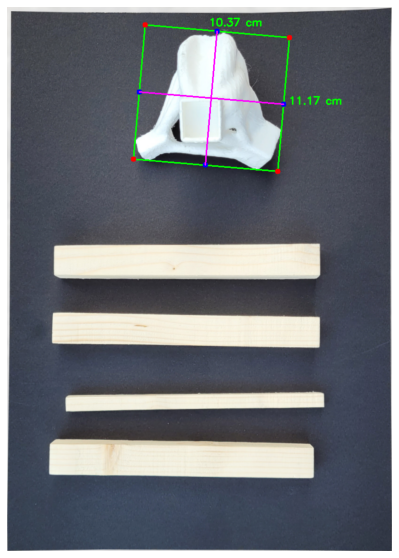

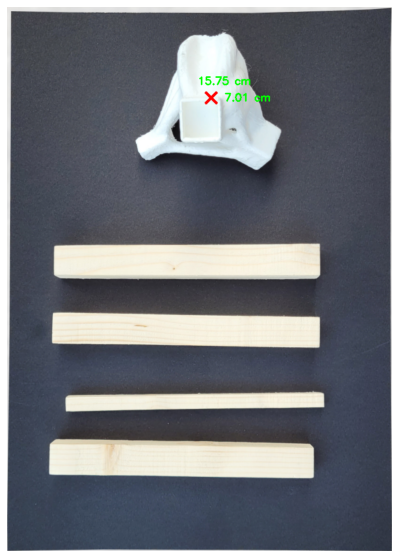

The Python script developed in the CV session could be used to create the visual link between the node members and the robotic arm, which is crucial for the later HRI part. A photo of the node and beams, placed so that they do not overlap, is taken as the input. The script detects the boundary of the background table and the boundaries of each member. With the measured size of the actual table as a second input, the pixels-per-metric ratio lets the computer convert each member's pixel dimensions into real dimensions. The target member can then be selected based on its size or on the coordinates of its centre point.
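The pixels-per-metric step and the two selection rules can be sketched as follows. This is a simplified illustration, not the session script: it assumes the boundary detection has already produced axis-aligned bounding boxes in pixels, and the `Box` class, the function names, the tolerance, and all numbers are hypothetical.

```python
from dataclasses import dataclass
from math import hypot


@dataclass
class Box:
    """Axis-aligned bounding box in pixels (assumed output of the detection step)."""
    x: float  # left edge
    y: float  # top edge
    w: float  # width
    h: float  # height

    @property
    def centre(self):
        return (self.x + self.w / 2, self.y + self.h / 2)


def pixels_per_metric(table_box, table_width_mm):
    """Pixels per millimetre, from the table's pixel width and its measured width."""
    return table_box.w / table_width_mm


def real_size_mm(box, ppm):
    """Convert a box's pixel dimensions to millimetres."""
    return (box.w / ppm, box.h / ppm)


def select_by_size(boxes, ppm, target_length_mm, tol_mm=5.0):
    """Members whose longer side is within tol_mm of the target length."""
    return [
        b for b in boxes
        if abs(max(real_size_mm(b, ppm)) - target_length_mm) <= tol_mm
    ]


def select_by_centre(boxes, point):
    """The member whose centre point is closest to the given pixel coordinate."""
    return min(
        boxes,
        key=lambda b: hypot(b.centre[0] - point[0], b.centre[1] - point[1]),
    )


# Made-up example: a table 600 mm wide that spans 1200 px in the photo.
table = Box(0, 0, 1200, 800)
beam = Box(100, 100, 400, 40)
node = Box(600, 300, 80, 80)
ppm = pixels_per_metric(table, 600.0)

if __name__ == "__main__":
    print("pixels per mm:", ppm)
    print("beam size (mm):", real_size_mm(beam, ppm))
    print("picked by size:", select_by_size([beam, node], ppm, 200.0))
    print("picked by centre:", select_by_centre([beam, node], (640, 340)))
```

This sketch assumes the camera looks straight down at the table, so a single ratio holds across the whole photo. A tilted camera would need a perspective correction before the conversion.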


Revision as of 16:58, 4 April 2022

Group 3: Fabio Sala